Quantum computing represents one of the most revolutionary technological frontiers of our time, promising to transform how we process information and solve complex problems. Unlike the classical computers we use daily, which process information in binary bits of 0s and 1s, quantum computers harness the counterintuitive principles of quantum mechanics to solve certain classes of problems far faster than any known classical method.

At its core, quantum computing uses the behavior of quantum systems such as atoms, ions, photons, and superconducting circuits to build computers whose state is a combination of many classical states at once. This lets a quantum algorithm act on many candidate solutions in a single step, although a measurement returns only one result, so the algorithm must be designed to make the right answer the likely one. The potential implications are staggering: some problems estimated to take today’s most powerful supercomputers thousands or even millions of years could potentially be completed in minutes or hours.

The field emerged in the early 1980s, when physicists including Paul Benioff and Richard Feynman proposed computers that operate on quantum mechanical principles. In the 1990s, algorithms such as Shor’s for factoring integers and Grover’s for unstructured search showed that certain computational problems could be tackled more efficiently with quantum algorithms than with known classical approaches. Today, major technology companies including IBM, Google, Microsoft, and Intel are investing billions of dollars in quantum research, recognizing its transformative potential across industries from cryptography and drug discovery to financial modeling and artificial intelligence.

While quantum computers remain largely experimental and face significant technical challenges, understanding their basic principles is becoming increasingly important as we stand on the brink of the quantum computing era. This technology promises to unlock computational capabilities that could revolutionize everything from medical research to climate modeling, making quantum literacy essential for anyone interested in the future of technology.

What Makes Quantum Computing Different from Classical Computing

The fundamental distinction between quantum and classical computing lies in how they store and process information. Classical computers use bits as their basic unit of information, with each bit existing in one of two definite states: either 0 or 1. These bits are manipulated through logic gates, and at any moment every bit in the machine holds a single definite value.

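Quantum computers, however, use quantum bits or qubits as their basic information unit. Unlike classical bits, qubits can exist in a state called superposition, a weighted combination of 0 and 1 that is often loosely described as being both at once. This isn’t merely a theoretical concept: it’s an experimentally verified quantum mechanical property. Measuring a qubit still yields only a single 0 or 1, but before measurement a register of n qubits is described by 2^n complex amplitudes, exponentially more numbers than the n values held by a classical register.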

When qubits are combined, the size of the state they describe scales exponentially. Two qubits are described by four amplitudes, one for each of the combinations 00, 01, 10, and 11; three qubits by eight; and four qubits by sixteen. In general, n qubits span 2^n basis states. This exponential scaling is what gives quantum computers their potential for massive computational advantages over classical systems.
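
To make the scaling concrete, here is a minimal sketch that counts the amplitudes needed to describe n qubits and estimates the memory a classical simulator would need to store them. The function names and the 16 bytes per amplitude (one double-precision complex number) are assumptions chosen for illustration, not figures from any particular simulator.

```python
def num_amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes in the state vector of n qubits (2^n)."""
    return 2 ** n_qubits


def classical_memory_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to hold the full state vector in classical memory.

    Assumes 16 bytes per amplitude (two 64-bit floats); this is an
    illustrative assumption, not a property of quantum hardware.
    """
    return num_amplitudes(n_qubits) * bytes_per_amplitude


for n in (2, 3, 4, 30, 50):
    print(f"{n:>2} qubits: {num_amplitudes(n)} amplitudes, "
          f"{classical_memory_bytes(n)} bytes to store classically")
```

Under this assumption, 30 qubits already need 16 GiB of classical memory, which is one way to see why simulating even modest quantum registers on ordinary hardware becomes impractical.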

The probabilistic nature of quantum computing also sets it apart from classical computation. While traditional computers are typically deterministic and return the same answer every time for a given input, a quantum computer’s measured output is probabilistic. Algorithms use quantum interference to make the correct answer the most likely outcome, and a computation is often repeated several times to build confidence in the result. This approach might seem less precise, but for certain extremely hard problems it could replace classical computations that would otherwise take an impractically long time.

Core Principles of Quantum Mechanics in Computing

Quantum computing is built on four fundamental principles of quantum mechanics that enable its extraordinary computational capabilities.

Superposition is perhaps the most famous quantum principle, allowing qubits to exist in multiple states simultaneously. When a qubit is in superposition, it’s not simply switching rapidly between 0 and 1 – it genuinely exists in a weighted combination of both states until measured. This property enables quantum computers to explore multiple solution paths simultaneously.
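
As a rough illustration of a weighted combination, the sketch below represents a single qubit as a pair of amplitudes and applies a Hadamard gate, a standard operation that turns the definite state 0 into an equal superposition. This is a classical simulation in plain Python; the names `ZERO`, `hadamard`, and `probabilities` are made up for this example.

```python
import math

# A single-qubit state is a pair of amplitudes (a0, a1) with
# |a0|^2 + |a1|^2 = 1. ZERO is the definite state "0".
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Probability of measuring 0 and of measuring 1."""
    return tuple(abs(a) ** 2 for a in state)


plus = hadamard(ZERO)
print("amplitudes:", plus)
print("probabilities:", probabilities(plus))  # both are approximately 0.5
```

The amplitudes, not the probabilities, are what the state stores; the probabilities only appear when the qubit is measured.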

Quantum entanglement creates correlations between qubits that have no classical counterpart and that persist even when the qubits are physically separated. When two qubits are maximally entangled, the result of measuring one tells you what the corresponding measurement of its partner will show, regardless of the distance between them, although this cannot be used to send information faster than light. Entangling gates let a quantum computer create and manipulate correlated states across many qubits, which is essential to the speedups quantum algorithms achieve.
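
One way to see these correlations is to simulate the simplest entangled state, a Bell pair, built by applying a Hadamard gate to the first qubit and then a controlled-NOT gate. The sketch below is a classical simulation under the assumption that a two-qubit state is stored as a list of four amplitudes in the order 00, 01, 10, 11; the function names are illustrative.

```python
import math
import random


def apply_h_first(state):
    """Hadamard on the first qubit of a two-qubit state [a00, a01, a10, a11]."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """CNOT with the first qubit as control: swaps the 10 and 11 amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def sample(state, rng):
    """Measure both qubits once; returns (first_bit, second_bit)."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return index >> 1, index & 1


# Start in 00, then entangle the two qubits.
bell = apply_cnot(apply_h_first([1, 0, 0, 0]))

rng = random.Random(0)  # fixed seed so the run is repeatable
shots = [sample(bell, rng) for _ in range(1000)]
print("distinct outcomes seen:", sorted(set(shots)))
```

Each qubit on its own looks like a fair coin, yet the two measured bits always agree: only the outcomes (0, 0) and (1, 1) ever occur.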

Quantum interference enables quantum computers to amplify correct answers while canceling out incorrect ones. Through carefully designed quantum circuits, many possible outcomes are eliminated through interference, while the desired solutions are amplified. This process is crucial for extracting meaningful results from quantum calculations.
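
A small example of interference, sketched as a classical simulation: applying a Hadamard gate twice returns a qubit to 0 because the two paths leading to 1 carry opposite signs and cancel. Inserting a phase flip between the two gates reverses which outcome survives. The helper names here are assumptions for illustration.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (a0, a1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def phase_flip(state):
    """Flip the sign of the amplitude of 1 (the Z gate)."""
    a0, a1 = state
    return (a0, -a1)


start = (1.0, 0.0)

# Two Hadamards: contributions to outcome 1 cancel, contributions to 0 add up.
twice = hadamard(hadamard(start))

# A phase flip in between changes the signs, so now outcome 0 cancels instead.
flipped = hadamard(phase_flip(hadamard(start)))

print("H then H:        ", [round(abs(a) ** 2, 6) for a in twice])
print("H, flip, then H: ", [round(abs(a) ** 2, 6) for a in flipped])
```

The phase flip does not change any measurement probability on its own; it only matters because the second gate lets the amplitudes interfere.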

Quantum measurement collapses the superposition state, forcing qubits to “choose” definite values. The art of quantum algorithm design involves manipulating qubits through various operations before measurement to maximize the probability of obtaining the correct answer.
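
Measurement can be sketched as sampling from the probabilities given by the squared amplitudes and then replacing the state with the definite value that was observed. The example below is a classical simulation with a hypothetical `measure` helper; the 80/20 weighting is an arbitrary choice for illustration.

```python
import math
import random


def measure(state, rng):
    """Measure a single-qubit state (a0, a1).

    Returns (outcome, collapsed_state): the observed bit and the definite
    state the qubit is left in afterwards.
    """
    p0 = abs(state[0]) ** 2
    outcome = 0 if rng.random() < p0 else 1
    collapsed = (1.0, 0.0) if outcome == 0 else (0.0, 1.0)
    return outcome, collapsed


rng = random.Random(42)  # fixed seed so the run is repeatable

# A qubit weighted 80% toward 0 and 20% toward 1.
state = (math.sqrt(0.8), math.sqrt(0.2))

shots = 10_000
zeros = sum(1 for _ in range(shots) if measure(state, rng)[0] == 0)
frequency_of_zero = zeros / shots
print("fraction of 0 outcomes:", frequency_of_zero)

# Measuring the collapsed state again repeats the first result.
first, collapsed = measure(state, rng)
second, _ = measure(collapsed, rng)
print("first:", first, "second:", second)
```

A single shot reveals only one bit; the underlying weights become visible only by preparing and measuring the same state many times.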

How Quantum Computers Process Information

Quantum computation works fundamentally differently from classical processing. A quantum computer begins by preparing qubits in superposition states, creating a vast space of possible computational paths. Quantum circuits, designed by programmers, then use specialized operations to generate entanglement between qubits and create interference patterns.

These quantum circuits manipulate the probability amplitudes of different quantum states through carefully orchestrated sequences of quantum gates. Unlike a classical logic gate, which maps definite input bits to definite output bits, a quantum gate is a reversible (unitary) transformation that updates the amplitudes of all basis states in the superposition at once.

The quantum algorithm guides this process, determining which operations to perform and in what sequence. Many possible outcomes are systematically canceled out through quantum interference, while others are amplified. The amplified outcomes represent the solutions to the computational problem.
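
The cancel-and-amplify pattern can be sketched with Grover’s search algorithm, chosen here only as a familiar illustration. The code below is a classical simulation of the amplitudes, not a program for real hardware: it starts from an equal superposition, marks one item by flipping the sign of its amplitude, and then reflects every amplitude about the mean. The function name and parameters are assumptions for this example.

```python
import math


def grover_probabilities(n_items, marked, iterations):
    """Simulate Grover iterations on a list of real amplitudes.

    Returns the measurement probability of each item after the given
    number of iterations.
    """
    # Equal superposition over all items.
    amplitudes = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion: reflect every amplitude about the mean, which boosts
        # the marked item and shrinks the others.
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return [a * a for a in amplitudes]


# Four items (two qubits): one iteration is enough.
print(grover_probabilities(4, marked=2, iterations=1))

# Eight items (three qubits): two iterations give a high, but not certain, hit rate.
print(grover_probabilities(8, marked=5, iterations=2))
```

With four items, a single iteration drives the unmarked amplitudes to zero, so the marked item is measured with certainty. With eight items, two iterations leave a small chance of a wrong answer, which is why such algorithms are often run more than once.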

This process allows a quantum computer to act on an exponentially large space of candidate states with each operation, potentially improving efficiency by many orders of magnitude for specific types of problems. The key advantage lies not in performing individual operations faster, but in algorithms that need far fewer steps because interference does work that a classical machine would have to do path by path.

Current Applications and Future Potential

While quantum computers remain largely experimental, they show promise for solving specific types of problems that are intractable for classical computers. Cryptography represents one of the most widely discussed applications: a sufficiently large, error-corrected quantum computer running Shor’s algorithm could break widely used public-key schemes such as RSA and elliptic-curve cryptography, while quantum key distribution offers a new form of quantum-secure communication.

Drug discovery and molecular modeling could be revolutionized by quantum computers’ ability to simulate quantum systems naturally. Since molecules and chemical reactions operate according to quantum mechanical principles, quantum computers might solve problems in pharmaceutical research that are beyond classical computational reach.

Financial modeling and optimization problems could benefit from quantum computing’s ability to explore multiple scenarios simultaneously. Complex portfolio optimization, risk analysis, and market modeling could potentially be performed much more efficiently.

Artificial intelligence and machine learning applications are being explored, particularly for problems involving pattern recognition in high-dimensional data spaces. Quantum algorithms might offer speedups for certain machine learning tasks, although whether these advantages hold up in practice remains an open research question.

Challenges and Limitations

Despite their promise, quantum computers face significant technical challenges. Quantum decoherence occurs when qubits lose their quantum properties due to environmental interference, introducing errors into calculations. Maintaining quantum states requires extremely precise control and isolation from external disturbances.

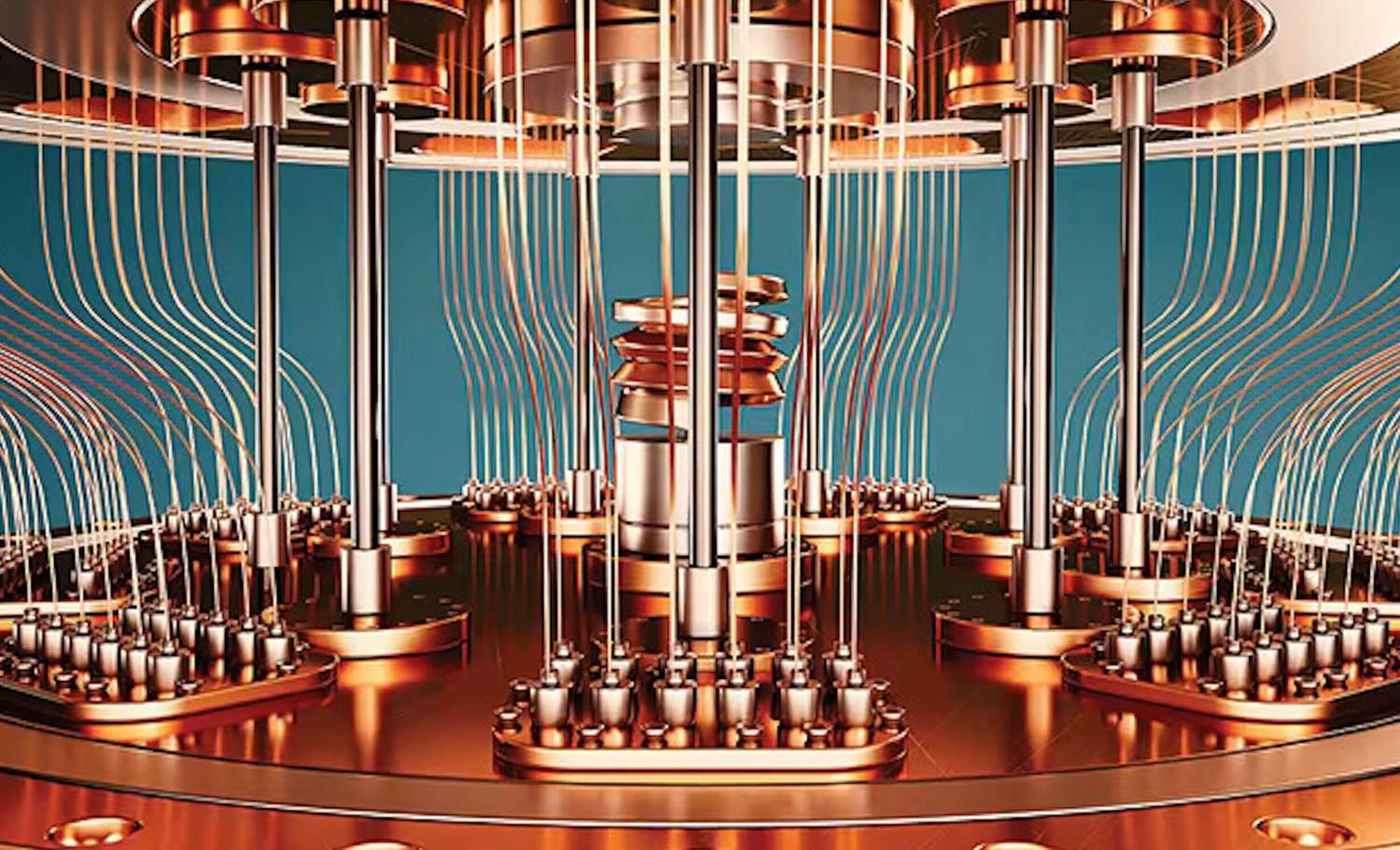

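Many current quantum computers require extreme operating conditions. Superconducting qubits, for example, operate at temperatures within a fraction of a degree of absolute zero to minimize thermal noise, while other approaches such as trapped ions rely on ultra-high vacuum and precisely controlled lasers. These requirements make quantum computers expensive and technically demanding to operate.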

Error rates in current quantum systems remain high compared to classical computers. Quantum error correction addresses this by encoding one logical qubit across many physical qubits, which significantly increases the hardware requirements for practical applications.
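
The trade of extra hardware for lower error rates can be illustrated with a deliberately simplified classical analogy: a three-bit repetition code with majority voting. Real quantum error correction cannot copy qubits and instead relies on entanglement and syndrome measurements, so treat this only as a sketch of the redundancy idea. The function names and the 10% physical error rate are assumptions for illustration.

```python
import random


def logical_error_rate(p):
    """Chance that a majority vote over 3 copies is wrong.

    Each copy flips independently with probability p; the vote fails when
    2 or 3 copies flip.
    """
    return 3 * p ** 2 * (1 - p) + p ** 3


def simulate(p, trials, rng):
    """Estimate the same failure rate by Monte Carlo simulation."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:
            failures += 1
    return failures / trials


p = 0.10  # illustrative physical error rate
rng = random.Random(1)  # fixed seed so the run is repeatable
print("physical error rate:", p)
print("logical error rate (formula):  ", logical_error_rate(p))
print("logical error rate (simulated):", simulate(p, 20_000, rng))
```

In this toy model, three unreliable copies with a 10% error rate behave like one copy with an error rate of about 2.8%. The improvement only holds when the physical error rate is low enough (below 50% here), which mirrors the threshold behavior of real error-correcting codes.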

The limited scope of quantum advantage means that quantum computers won’t replace classical computers for most everyday tasks. Instead, they’re expected to excel at specific problem types while classical computers continue handling general-purpose computing.

The Road Ahead

Quantum computing stands at a critical juncture between experimental research and practical application. While current systems remain largely impractical for real-world problems, rapid progress in quantum hardware and algorithm development suggests that practical quantum advantage may be achievable within the next decade.

Major technology companies and governments worldwide are investing heavily in quantum research, recognizing its potential to provide significant competitive advantages in various fields. As quantum computers become more stable and error rates decrease, we can expect to see the first practical applications emerge in specialized domains.

The quantum computing revolution won’t happen overnight, but its eventual impact on technology, science, and society could be as profound as the development of classical computers themselves. Understanding these fundamental principles today prepares us for a future where quantum and classical computing work together to solve humanity’s most challenging problems.