Network latency represents one of the most critical yet often misunderstood aspects of modern digital infrastructure. As businesses increasingly rely on cloud-based applications, real-time communications, and data-driven operations, understanding and managing network latency has become essential for maintaining competitive advantage and ensuring optimal user experiences.

At its core, network latency is the delay that occurs when data travels from one point to another across a network. This seemingly simple concept encompasses a complex interplay of physical, technological, and operational factors that can significantly impact everything from website loading times to video conference quality. Whether you’re streaming a video, participating in an online meeting, or simply browsing the web, latency affects every digital interaction you have.

The importance of low latency cannot be overstated in today’s interconnected world. High latency can transform efficient business operations into frustrating bottlenecks, causing applications to degrade in performance or even fail entirely. For organizations operating in real-time environments, such as financial trading, online gaming, or IoT sensor networks, even milliseconds of additional delay can translate into significant operational and financial consequences.

Understanding network latency goes beyond technical specifications; it’s about recognizing how data movement affects business outcomes and user satisfaction. As digital transformation accelerates across industries, the ability to identify, measure, and optimize latency has become a fundamental skill for IT professionals and business leaders alike. This comprehensive exploration will equip you with the knowledge needed to navigate the complexities of network latency and implement effective strategies for optimization.

Understanding Network Latency Fundamentals

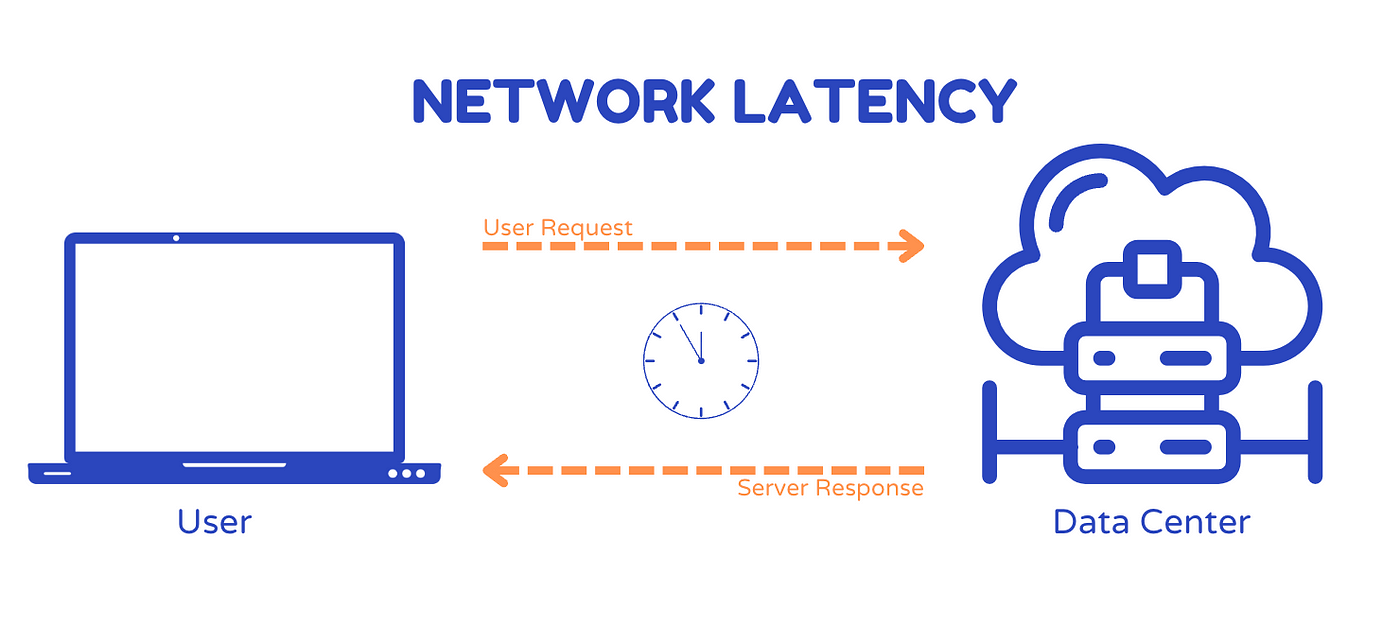

Network latency, sometimes referred to as network lag or delay, measures the time it takes for data to travel from its source to its destination across a network. This delay is typically measured in milliseconds and represents the total time required for a data packet to complete its journey, including processing time at various network devices along the way.

The concept extends beyond one-way transmission time. In practice, latency is most often reported as round-trip time (RTT): the time needed for a request to travel from a client device to a server and for the response to come back. RTT includes not only the physical travel time but also the processing delays encountered at routers, switches, and servers along the network path.

Low-latency networks respond quickly, with delays typically in the range of 30 to 40 milliseconds. High-latency networks experience longer delays that can significantly degrade application performance and user experience. Latency under 100 milliseconds is generally considered acceptable, but the actual requirement varies widely with the application type and user expectations.

Primary Causes of Network Latency

Physical Infrastructure Limitations

The physical components of network infrastructure play a fundamental role in determining latency levels, starting with the choice of transmission medium. Fiber optic cables carry far more bandwidth than traditional copper cables and lose less signal strength over distance, which shortens transmission delays and reduces the need for intermediate equipment along the path. Each time data switches between different transmission media, the conversion adds further delay.

Network hardware quality also critically affects latency performance. Advanced routers and switches can process and forward packets more efficiently, reducing both processing and queuing delays. Legacy equipment or underpowered devices create bottlenecks that compound latency issues across the entire network path.

Geographic Distance and Network Hops

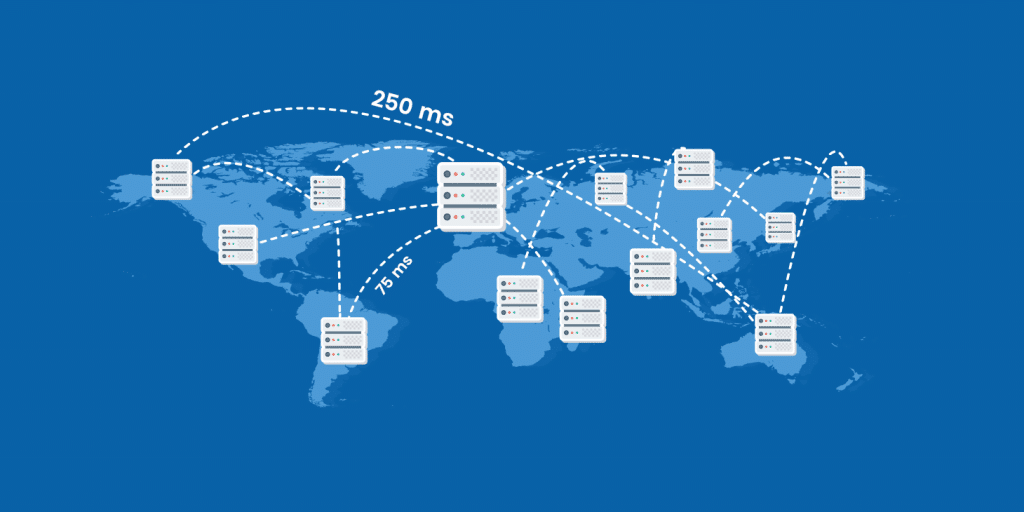

Physical distance between communicating endpoints directly correlates with increased latency. Data traveling from New York to California experiences significantly more delay than data traveling from New York to Philadelphia, potentially adding 40 milliseconds or more of delay. This distance-based latency stems from the fundamental physics of signal propagation through transmission media.
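As a rough check on figures like this, propagation delay can be estimated by dividing distance by signal speed. The sketch below assumes a signal speed of about 200,000 km/s in optical fiber (roughly two-thirds of the speed of light in a vacuum) and uses approximate straight-line distances chosen for illustration; real cable routes are longer, and the function name is hypothetical.

```python
# Assumed signal speed in optical fiber: roughly two-thirds of the speed of light.
FIBER_SPEED_KM_PER_S = 200_000.0


def propagation_delay_ms(distance_km: float,
                         speed_km_per_s: float = FIBER_SPEED_KM_PER_S) -> float:
    """Return the one-way propagation delay in milliseconds."""
    if distance_km < 0 or speed_km_per_s <= 0:
        raise ValueError("distance must be >= 0 and speed must be > 0")
    return distance_km / speed_km_per_s * 1000.0


if __name__ == "__main__":
    # Approximate straight-line distances, used only for illustration.
    routes = [("New York -> Philadelphia", 130), ("New York -> California", 3900)]
    for label, km in routes:
        one_way = propagation_delay_ms(km)
        print(f"{label}: {one_way:.2f} ms one way, {2 * one_way:.2f} ms round trip")
```

Under these assumptions the cross-country path costs roughly 19.5 ms one way, or about 39 ms for a round trip, before any processing or queuing delay is added.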

Multiple network hops compound distance-related delays. Each intermediate router must process packet headers, consult routing tables, and forward data to the next network node. These processing steps accumulate across the entire network path, with each hop adding incremental delays that can substantially impact performance.

Network Congestion and Traffic Volume

High traffic volumes create network congestion that dramatically increases latency. When network devices reach their processing capacity limits, data packets must wait in queues before being forwarded to their destinations. This queuing delay becomes particularly problematic during peak usage periods when multiple users compete for limited network resources.

Bandwidth limitations exacerbate congestion-related latency issues. Networks with insufficient bandwidth capacity cannot absorb traffic surges, forcing packets to wait longer for transmission opportunities. This creates a cascading effect: long queues delay or drop packets, senders retransmit them, and the retransmissions add to the congestion.

Types of Network Delay Components

Network latency consists of four distinct delay components, each contributing to the total end-to-end delay:

Processing delay represents the time required for routers to examine packet headers and determine appropriate forwarding actions. Modern high-performance routers minimize this delay through optimized hardware and efficient routing algorithms.

Queuing delay occurs when packets wait in router buffers before being transmitted. This variable delay depends heavily on current network traffic levels and can fluctuate significantly based on congestion conditions.

Transmission delay reflects the time needed to push packet bits onto the transmission link. This delay depends on packet size and link bandwidth capacity, with larger packets requiring proportionally more transmission time.

Propagation delay represents the fundamental physical time required for signals to travel through transmission media. This delay is bounded by the speed of light in the medium (signals in optical fiber travel at roughly two-thirds of the speed of light in a vacuum) and cannot be eliminated, only minimized through optimal routing and infrastructure placement.
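The four components simply add up at each hop. The following minimal sketch shows that sum along with the transmission delay formula (packet size in bits divided by link bandwidth). The class and function names are hypothetical, and the sample values are illustrative rather than measured.

```python
from dataclasses import dataclass


@dataclass
class HopDelay:
    """Delay components for a single hop, all in milliseconds."""
    processing_ms: float
    queuing_ms: float
    transmission_ms: float
    propagation_ms: float

    @property
    def total_ms(self) -> float:
        # Total delay at a hop is the sum of the four components.
        return (self.processing_ms + self.queuing_ms
                + self.transmission_ms + self.propagation_ms)


def transmission_delay_ms(packet_bytes: int, link_bps: float) -> float:
    """Time to push every bit of a packet onto a link of the given bandwidth."""
    if link_bps <= 0:
        raise ValueError("link bandwidth must be positive")
    return packet_bytes * 8 / link_bps * 1000.0


if __name__ == "__main__":
    # Illustrative values: a 1500-byte packet on a 100 Mbps link.
    hop = HopDelay(
        processing_ms=0.05,
        queuing_ms=1.0,
        transmission_ms=transmission_delay_ms(1500, 100e6),
        propagation_ms=5.0,
    )
    print(f"total delay for this hop: {hop.total_ms:.2f} ms")
```

End-to-end latency is then the sum of `total_ms` over every hop on the path, which is why queuing delay, the only component that varies strongly with load, tends to dominate on congested routes.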

Impact on Different Applications and Services

Real-Time Communications

Voice over IP (VoIP) and video conferencing applications are particularly sensitive to latency variations. High latency creates noticeable delays in conversations, making natural communication difficult and reducing user satisfaction. Jitter, or variability in packet delay, can cause audio dropouts and video quality degradation that severely impacts communication effectiveness.
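Jitter can be quantified from a series of per-packet transit times. The sketch below follows the smoothed interarrival jitter estimator described in RFC 3550 (the RTP specification), where each new difference in transit time moves the running estimate by one sixteenth of the gap. The function name and input format are assumptions made for illustration.

```python
from typing import Sequence


def interarrival_jitter_ms(transit_times_ms: Sequence[float]) -> float:
    """Smoothed jitter estimate over consecutive packet transit times.

    Uses the RFC 3550 style update: J = J + (|D| - J) / 16,
    where D is the change in transit time between consecutive packets.
    """
    jitter = 0.0
    for previous, current in zip(transit_times_ms, transit_times_ms[1:]):
        difference = abs(current - previous)
        jitter += (difference - jitter) / 16.0
    return jitter


if __name__ == "__main__":
    steady = [20.0, 20.0, 20.0, 20.0]
    bursty = [20.0, 45.0, 18.0, 60.0]
    print(f"steady stream jitter: {interarrival_jitter_ms(steady):.2f} ms")
    print(f"bursty stream jitter: {interarrival_jitter_ms(bursty):.2f} ms")
```

A stream with constant delay yields zero jitter regardless of how large that delay is, which is why latency and jitter need to be tracked separately for voice and video traffic.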

Cloud Services and Applications

Cloud-based applications experience performance degradation when latency increases between client devices and remote servers. This is especially problematic for interactive applications that require frequent client-server communication. Optimized cloud services require careful consideration of server placement and network path optimization to maintain acceptable performance levels.

Gaming and Interactive Media

Online gaming represents one of the most latency-sensitive application categories. High latency creates lag that can make games unplayable, particularly in competitive environments where split-second timing determines outcomes. Streaming services also suffer from latency issues, with high delays causing buffering and quality reduction.

Measuring and Monitoring Latency

Ping testing is the most common method for measuring network latency. The ping command sends Internet Control Message Protocol (ICMP) echo request packets to a target device and measures the round-trip time until each echo reply arrives. This simple tool offers valuable insight into basic connectivity and latency performance.
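Ping results can also be collected programmatically. The sketch below runs the system `ping` command and extracts the round-trip times from its output. It assumes the `-c` count flag used on Linux and macOS (Windows uses `-n` instead) and the common `time=12.3 ms` output format; the function names are hypothetical.

```python
import re
import subprocess
from typing import List

# Matches the "time=12.3 ms" or "time<1ms" field in typical ping output.
_RTT_PATTERN = re.compile(r"time[=<]([\d.]+)\s*ms")


def parse_ping_rtts(output: str) -> List[float]:
    """Extract round-trip times in milliseconds from ping output text."""
    return [float(value) for value in _RTT_PATTERN.findall(output)]


def ping_rtts(host: str, count: int = 4) -> List[float]:
    """Run the system ping command and return the measured RTTs."""
    # "-c" sets the packet count on Linux and macOS; Windows expects "-n".
    result = subprocess.run(
        ["ping", "-c", str(count), host],
        capture_output=True,
        text=True,
        timeout=30,
    )
    return parse_ping_rtts(result.stdout)


# Example usage (requires network access and the ping utility):
#     rtts = ping_rtts("example.com")
#     if rtts:
#         print(f"min {min(rtts):.1f} ms, max {max(rtts):.1f} ms")
```

An empty result means no replies were parsed, which can indicate packet loss, a blocked ICMP path, or an output format the pattern does not cover.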

More sophisticated monitoring approaches involve continuous latency measurement across multiple network paths and endpoints. Network monitoring tools can track latency trends over time, identify performance degradation patterns, and alert administrators to potential issues before they impact users.
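Continuous monitoring of this kind can be approximated with a sliding window of recent measurements and a percentile-based alert. The sketch below is one possible shape for it, not a description of any particular monitoring product. The class name, the window size, and the use of the 95th percentile are assumptions; the 100 ms default threshold mirrors the acceptability figure mentioned earlier.

```python
from collections import deque
from statistics import mean, quantiles


class LatencyMonitor:
    """Keeps a sliding window of RTT samples and flags degradation."""

    def __init__(self, window: int = 100, p95_threshold_ms: float = 100.0) -> None:
        # A bounded deque discards the oldest sample once the window is full.
        self.samples = deque(maxlen=window)
        self.p95_threshold_ms = p95_threshold_ms

    def record(self, rtt_ms: float) -> None:
        self.samples.append(rtt_ms)

    def average_ms(self) -> float:
        return mean(self.samples)

    def p95_ms(self) -> float:
        # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
        return quantiles(self.samples, n=20)[-1]

    def is_degraded(self) -> bool:
        # Require a minimum number of samples before evaluating the threshold.
        return len(self.samples) >= 5 and self.p95_ms() > self.p95_threshold_ms


if __name__ == "__main__":
    monitor = LatencyMonitor(window=10)
    for rtt in [32.0, 35.0, 31.0, 140.0, 150.0, 160.0, 155.0]:
        monitor.record(rtt)
        print(f"rtt={rtt:.0f} ms degraded={monitor.is_degraded()}")
```

Alerting on a high percentile rather than the average makes occasional slow responses visible even when most requests are fast.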

Strategies for Latency Optimization

Infrastructure Improvements

Upgrading network hardware to higher-performance routers and switches can significantly reduce processing and queuing delays. Implementing fiber optic connections where possible reduces transmission medium bottlenecks and can provide substantial latency improvements.

Geographic Optimization

Content Delivery Networks (CDNs) reduce latency by positioning content servers closer to end users. This geographic distribution minimizes the physical distance data must travel, directly reducing propagation delays and improving user experience.
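The idea of serving users from the closest location can be illustrated with a simple distance comparison. The sketch below picks the nearest of several edge locations using the haversine great-circle formula. The location names and coordinates are hypothetical, and production CDNs generally rely on DNS or anycast routing and measured network conditions rather than raw geographic distance.

```python
import math
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0

# Hypothetical edge locations as (latitude, longitude) in degrees.
EDGE_LOCATIONS: Dict[str, Tuple[float, float]] = {
    "us-east": (40.71, -74.01),
    "us-west": (34.05, -118.24),
    "eu-west": (51.51, -0.13),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_edge(user: Tuple[float, float],
                 edges: Dict[str, Tuple[float, float]] = EDGE_LOCATIONS) -> str:
    """Return the name of the edge location closest to the user."""
    return min(edges, key=lambda name: haversine_km(*user, *edges[name]))


if __name__ == "__main__":
    philadelphia = (39.95, -75.17)
    print(f"nearest edge: {nearest_edge(philadelphia)}")
```

Shorter distance lowers propagation delay only; a nearby server on a congested path can still respond more slowly than a distant one on a clear path.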

Traffic Management

Implementing Quality of Service (QoS) policies allows network administrators to prioritize latency-sensitive traffic over less critical data flows. This ensures that real-time applications receive preferential treatment during periods of network congestion.
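The effect of prioritization can be shown with a strict priority queue, in which latency-sensitive packets always leave before bulk traffic. This is a simplified sketch with hypothetical class names and priority values. Strict priority is only one possible queuing discipline, and it can starve low-priority traffic if high-priority load is sustained.

```python
import heapq
import itertools
from typing import Any, List, Tuple


class PriorityScheduler:
    """Strict priority queue: lower priority numbers are sent first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Any]] = []
        # The counter keeps packets of equal priority in arrival order.
        self._sequence = itertools.count()

    def enqueue(self, packet: Any, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._sequence), packet))

    def dequeue(self) -> Any:
        if not self._heap:
            raise IndexError("no packets queued")
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    # Hypothetical priority classes: 0 = voice, 1 = interactive, 2 = bulk.
    scheduler = PriorityScheduler()
    scheduler.enqueue("backup-chunk", priority=2)
    scheduler.enqueue("voip-frame-1", priority=0)
    scheduler.enqueue("web-request", priority=1)
    scheduler.enqueue("voip-frame-2", priority=0)
    while len(scheduler):
        print(scheduler.dequeue())
```

The voice frames leave first even though the backup chunk arrived earlier, which is the behavior a QoS policy aims for during congestion.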

Protocol optimization can also reduce latency by minimizing unnecessary communication overhead. Selecting appropriate protocols for specific applications, such as UDP for real-time streaming versus TCP for reliable data transfer, can significantly impact latency performance.