Understanding network performance metrics is crucial in today’s digital landscape, where seamless connectivity determines business success and user satisfaction. Whether you’re streaming a video conference, playing online games, or simply browsing the web, three fundamental metrics silently influence your experience: ping, jitter, and packet loss. These interconnected measurements serve as the vital signs of network health, revealing how efficiently data travels across the internet infrastructure.

Ping represents the heartbeat of network connectivity, measuring how quickly your device can communicate with remote servers. Think of it as the digital equivalent of shouting across a canyon and timing how long it takes to hear your echo return. This simple yet powerful diagnostic tool has been helping network administrators troubleshoot connectivity issues since 1983, when computer scientist Mike Muuss created it at the US Army’s Ballistic Research Laboratory, a predecessor of today’s US Army Research Laboratory.

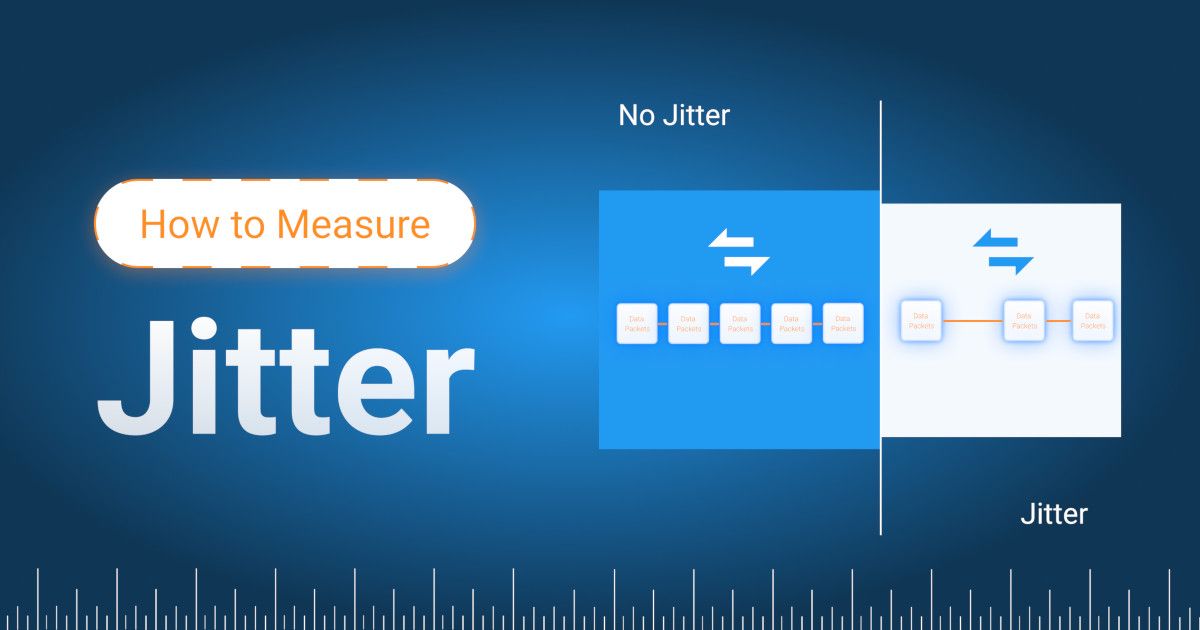

Jitter, on the other hand, measures the consistency of that communication. While ping tells you the average response time, jitter reveals how much that timing varies from packet to packet. It’s the difference between a metronome’s steady beat and an irregular rhythm that disrupts the flow of real-time applications.

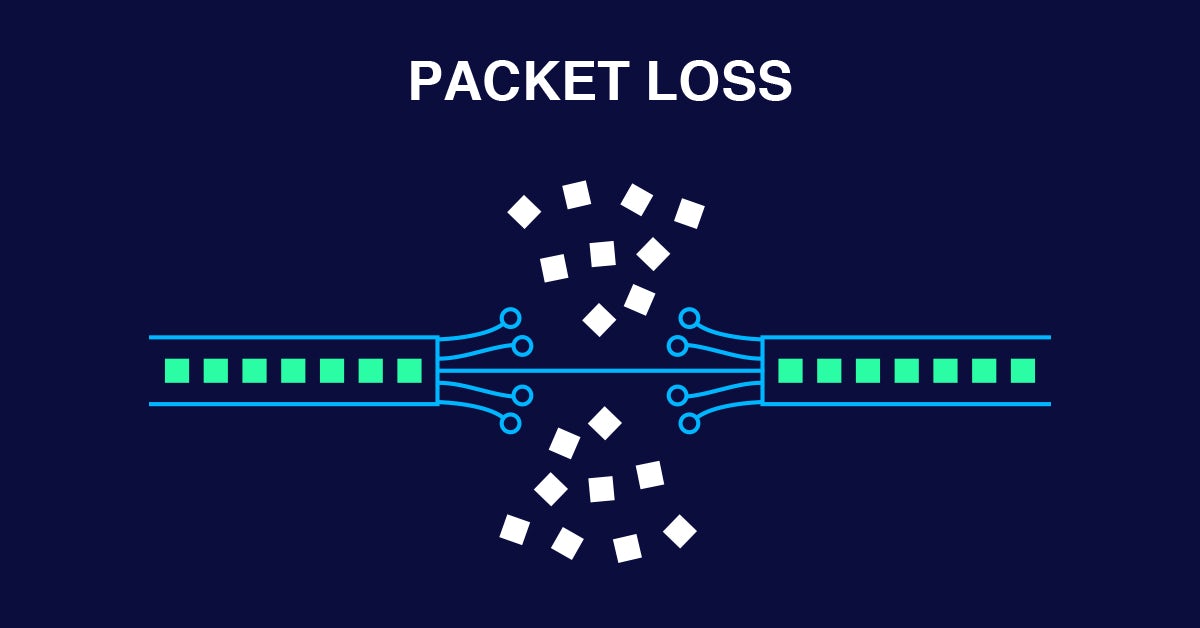

Packet loss represents the most disruptive of these metrics, occurring when data packets fail to reach their intended destination. In a perfect world, every piece of information sent across a network would arrive intact and on time. However, network congestion, hardware failures, and routing issues can cause packets to disappear into the digital void, creating gaps in communication that manifest as frozen video frames, choppy audio, or failed downloads.

Understanding these metrics empowers users and administrators to diagnose network issues, optimize performance, and ensure reliable connectivity for critical applications.

Understanding Ping: The Foundation of Network Diagnostics

Ping operates as a fundamental network diagnostic tool that tests the reachability of hosts on Internet Protocol networks. The utility sends Internet Control Message Protocol (ICMP) echo request packets to a target destination and measures the time required for the corresponding echo reply to return. This round-trip time (RTT) provides valuable insights into network latency and connectivity health.

The ping command reports several key metrics, including minimum, maximum, and average round-trip times, along with packet loss statistics. Network administrators rely on these measurements to identify connectivity issues, assess network performance, and troubleshoot routing problems. The tool’s simplicity makes it accessible across virtually all operating systems, from desktop computers to embedded network devices.
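As a rough illustration of the summary statistics described above, the sketch below computes them from a list of round-trip samples. The function name `summarize_rtts` and the convention of using `None` for a probe that received no reply are assumptions made for this example, not part of any ping implementation.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_rtts(samples: Sequence[Optional[float]]) -> dict:
    """Summarize round-trip times in milliseconds.

    A value of None marks a probe that never received a reply
    (a convention assumed for this sketch).
    """
    replies = [s for s in samples if s is not None]
    sent = len(samples)
    lost = sent - len(replies)
    return {
        "sent": sent,
        "received": len(replies),
        "loss_pct": 100.0 * lost / sent if sent else 0.0,
        "min_ms": min(replies) if replies else None,
        "avg_ms": mean(replies) if replies else None,
        "max_ms": max(replies) if replies else None,
    }


# Four probes, one of which was lost.
summary = summarize_rtts([20.0, 22.0, None, 30.0])
print(summary)
```

Keeping lost probes in the same list as the timings means the loss percentage and the latency figures always describe the same set of probes.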

Practical Applications of Ping

Modern ping implementations offer various command-line options that enhance diagnostic capabilities. Users can control payload size, specify the number of test packets, set time-to-live (TTL) limits, and adjust intervals between requests. These features enable targeted testing scenarios, from basic connectivity verification to detailed performance analysis.
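The options mentioned above map onto command-line flags that differ between platforms. The sketch below assumes the Linux iputils version of ping, where `-c` sets the packet count, `-s` the payload size, `-t` the TTL, and `-i` the interval. Windows and macOS use different flags for some of these, and the helper name `build_ping_command` is hypothetical.

```python
from typing import List, Optional


def build_ping_command(
    host: str,
    count: int = 4,
    payload_bytes: Optional[int] = None,
    ttl: Optional[int] = None,
    interval_s: Optional[float] = None,
) -> List[str]:
    """Build an argument list for ping, assuming Linux iputils flags."""
    cmd = ["ping", "-c", str(count)]         # number of echo requests
    if payload_bytes is not None:
        cmd += ["-s", str(payload_bytes)]    # payload size in bytes
    if ttl is not None:
        cmd += ["-t", str(ttl)]              # time-to-live limit
    if interval_s is not None:
        cmd += ["-i", str(interval_s)]       # seconds between requests
    cmd.append(host)
    return cmd


cmd = build_ping_command("example.com", count=5, payload_bytes=1000, ttl=32, interval_s=0.5)
print(" ".join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, capture_output=True, text=True)` on a system where these flags apply.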

The ping utility also supports IPv6 networks, either through companion tools like ping6 or, on many current systems, through a -6 option to ping itself. Additionally, pinging a hostname rather than an IP address exercises Domain Name System (DNS) resolution, helping identify whether connectivity issues stem from network problems or DNS configuration errors.

Jitter: The Consistency Factor in Network Performance

Jitter represents the variation in packet delivery timing across network connections. While latency measures the time packets take to travel from source to destination, jitter quantifies how much that timing fluctuates between individual packets. This variability directly impacts applications requiring consistent data flow, particularly real-time communications.
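One simple way to put a number on this fluctuation is the mean absolute difference between consecutive latency samples. The sketch below uses that definition as an assumption. Monitoring tools and protocols define jitter in different ways, and the function name `mean_jitter` is made up for this example.

```python
from typing import Sequence


def mean_jitter(latencies_ms: Sequence[float]) -> float:
    """Mean absolute difference between consecutive latency samples (ms)."""
    if len(latencies_ms) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(latencies_ms, latencies_ms[1:])]
    return sum(diffs) / len(diffs)


steady = [23.5, 23.5, 23.5, 23.5]    # average latency 23.5 ms
uneven = [20.0, 24.0, 21.0, 29.0]    # average latency also 23.5 ms

print(mean_jitter(steady))
print(mean_jitter(uneven))
```

Both series have the same average latency, but only the second shows variation between packets, which is the property jitter is meant to capture.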

Network jitter occurs due to several factors, including network congestion, routing inefficiencies, and varying transmission paths. When packets encounter different delays along their routes, they arrive at destinations with irregular timing intervals. This inconsistency disrupts the smooth flow of data essential for applications like video conferencing, Voice over IP (VoIP), and online gaming.

Measuring and Managing Jitter

Acceptable jitter levels depend heavily on application requirements. Real-time communication applications typically require jitter measurements of 30 milliseconds or less to maintain quality. Higher jitter values indicate greater variance in packet delivery timing, potentially causing audio distortion, video artifacts, or gameplay disruptions.

Organizations implement several strategies to mitigate jitter effects. Quality of Service (QoS) protocols prioritize critical traffic, ensuring time-sensitive packets receive preferential treatment. Traffic shaping controls data flow to prevent network congestion, while jitter buffers temporarily store incoming packets to smooth out timing variations. These buffers introduce controlled delays that compensate for irregular packet arrival times, ensuring consistent playback quality.
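The jitter buffer trade-off can be sketched with a fixed playout delay: each packet is scheduled for playback a set time after it was sent, and any packet arriving later than that misses its slot. This is a simplified model with made-up timestamps. Real buffers are often adaptive, and the name `late_packets` is hypothetical.

```python
from typing import List, Sequence, Tuple

# Each packet is (sequence number, send time in ms, arrival time in ms).
Packet = Tuple[int, float, float]


def late_packets(packets: Sequence[Packet], buffer_ms: float) -> List[int]:
    """Return sequence numbers that arrive after their scheduled playout time."""
    late = []
    for seq, send_ms, arrival_ms in packets:
        playout_ms = send_ms + buffer_ms    # fixed playout schedule
        if arrival_ms > playout_ms:
            late.append(seq)
    return late


# Illustrative data: packets sent every 20 ms with network delays
# of 40, 55, 45, and 75 ms.
packets = [(0, 0, 40), (1, 20, 75), (2, 40, 85), (3, 60, 135)]

print(late_packets(packets, buffer_ms=50))
print(late_packets(packets, buffer_ms=80))
```

A larger buffer absorbs more timing variation, but every packet is then played back later, which is the controlled delay the buffer introduces.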

Packet Loss: When Data Disappears

Packet loss occurs when data packets fail to reach their intended destinations within network communications. This phenomenon typically results from network congestion, where intermediate devices like routers and switches become overwhelmed and begin dropping packets. Hardware failures, configuration errors, and routing inefficiencies can also contribute to packet loss scenarios.
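When packets carry sequence numbers, a receiver can estimate loss by looking for gaps. The sketch below assumes the receiver knows the first and last sequence numbers that were sent. The function name `loss_report` and the sample data are illustrative only.

```python
from typing import Iterable, List, Tuple


def loss_report(received: Iterable[int], first_seq: int, last_seq: int) -> Tuple[List[int], float]:
    """Return the missing sequence numbers and the loss percentage."""
    expected = set(range(first_seq, last_seq + 1))
    if not expected:
        return [], 0.0
    missing = sorted(expected - set(received))
    return missing, 100.0 * len(missing) / len(expected)


# Ten packets (0 through 9) were sent; three never arrived.
missing, loss_pct = loss_report([0, 1, 3, 4, 7, 8, 9], first_seq=0, last_seq=9)
print(missing, loss_pct)
```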

The symptoms of packet loss vary depending on the affected applications. In online gaming, packet loss manifests as jerky character movements, timeout errors, and unplayable conditions similar to high latency effects. Video conferencing and VoIP applications experience the “robot effect” with stuttering voices, frozen frames, and complete communication breakdowns during severe packet loss events.

Protocol Responses to Packet Loss

Different network protocols handle packet loss through distinct mechanisms. Transmission Control Protocol (TCP) automatically retransmits dropped packets, using either fast retransmit, which is triggered when duplicate acknowledgments signal a gap, or timeout-based retransmission when no acknowledgment arrives in time. This reliability comes at the cost of increased latency and potential throughput reduction during high packet loss scenarios.
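Fast retransmission is commonly triggered when the sender sees three duplicate acknowledgments for the same data, which suggests a segment was lost while later ones got through. The sketch below models only that counting rule. It is not a TCP implementation, and the name `fast_retransmit_points` and the sample ACK values are made up for illustration.

```python
from typing import Iterable, List

DUP_ACK_THRESHOLD = 3  # duplicate ACKs that trigger a fast retransmit


def fast_retransmit_points(acks: Iterable[int]) -> List[int]:
    """Return the ACK numbers at which a fast retransmit would be triggered."""
    triggers = []
    last_ack = None
    dup_count = 0
    for ack in acks:
        if ack == last_ack:
            dup_count += 1
            if dup_count == DUP_ACK_THRESHOLD:
                triggers.append(ack)    # resend the segment starting at this byte
        else:
            last_ack = ack              # new data acknowledged, reset the counter
            dup_count = 0
    return triggers


# The receiver keeps acknowledging byte 2000 because the segment starting
# there is missing, then jumps ahead once the retransmission fills the gap.
print(fast_retransmit_points([1000, 2000, 2000, 2000, 2000, 5000]))
```

Waiting for several duplicates rather than one helps avoid retransmitting when packets were merely reordered in transit.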

User Datagram Protocol (UDP) takes a different approach, offering no automatic retransmission capabilities. Real-time streaming applications often prefer UDP because they can tolerate some packet loss in exchange for lower latency. Applications requiring UDP retransmission must implement custom recovery mechanisms or switch to TCP for guaranteed delivery.

The Interconnected Relationship

These three metrics work together to define network performance quality. High ping times indicate slow network responses, while excessive jitter disrupts real-time applications regardless of average latency. Packet loss compounds these issues by forcing retransmissions that increase both latency and jitter.

Understanding these relationships helps network administrators prioritize optimization efforts. Addressing network congestion can improve all three metrics at once, while targeted solutions like QoS implementation can specifically reduce jitter without necessarily improving ping times.

Monitoring and Optimization Strategies

Regular monitoring of ping, jitter, and packet loss provides early warning signs of network degradation. Businesses relying on real-time applications should establish baseline measurements and implement alerting systems for metric thresholds. Comprehensive network monitoring tools can track these metrics continuously, enabling proactive maintenance and optimization.
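A threshold check of the kind described above might look like the following sketch. The limit values are assumptions chosen for illustration (only the 30 ms jitter figure echoes the guideline mentioned earlier), and real limits should come from an organization's own baseline measurements. The names `LIMITS` and `check_thresholds` are hypothetical.

```python
from typing import Dict, List

# Illustrative limits only; derive real ones from baseline measurements.
LIMITS: Dict[str, float] = {
    "ping_ms": 100.0,
    "jitter_ms": 30.0,
    "loss_pct": 1.0,
}


def check_thresholds(metrics: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Return the names of metrics whose values exceed their limits."""
    return [
        name
        for name, value in metrics.items()
        if name in limits and value > limits[name]
    ]


sample = {"ping_ms": 45.0, "jitter_ms": 42.0, "loss_pct": 0.5}
alerts = check_thresholds(sample, LIMITS)
print(alerts)
```

An alerting system would run a check like this on each new measurement and notify someone whenever the returned list is not empty.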

Optimization strategies include infrastructure upgrades, bandwidth increases, and protocol optimization. Implementing proper QoS policies ensures critical applications receive necessary network resources, while traffic shaping prevents congestion-induced performance degradation.